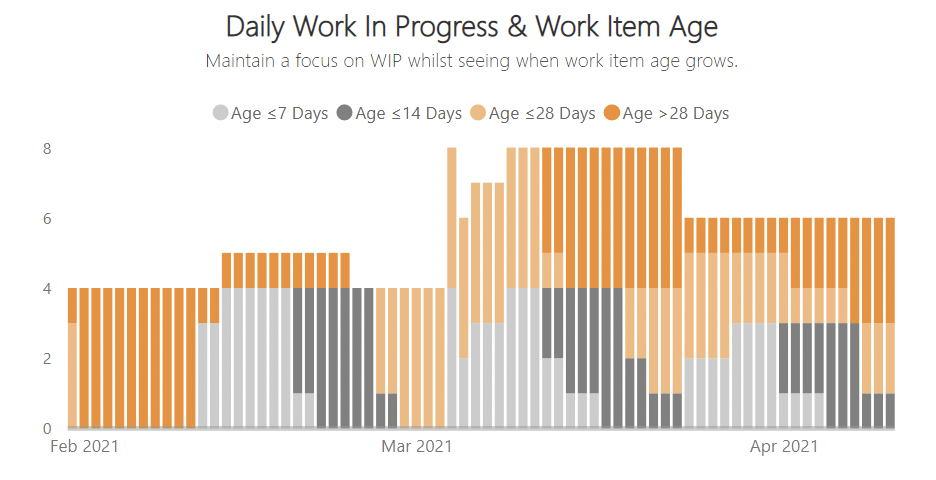

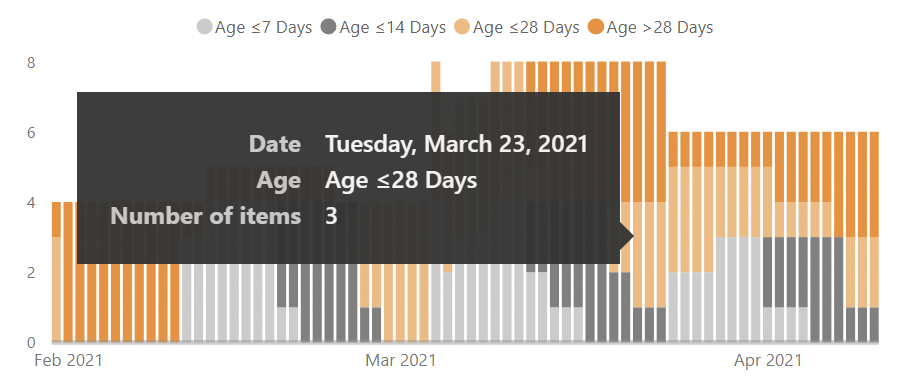

FlowViz is a free data visualisation template for Power BI that helps you visualise flow metrics from the data your team’s Kanban board generates in Azure DevOps, Azure DevOps Server, TFS or GitHub Issues. The template contains industry-recognised flow metrics (Throughput, Work In Progress, Cycle Time, Work Item Age) as well as probabilistic forecasting (Monte Carlo simulation) for answering “when will it be done?” or “what will we get?”. It is inspired by the work of many leaders in our field, such as Larry Maccherone, Troy Magennis, Dan Vacanti and Prateek Singh.

Since launching in 2019, it has been downloaded hundreds of times and continues to be one of the most popular tools in the marketplace for flow metrics.

I'm pleased to announce the next iteration of the product: the FlowViz Fundamentals course.

FlowViz Fundamentals provides a comprehensive overview of every chart within FlowViz: how each one is calculated and what to look for in it. It also includes exercises in which you are presented with different teams' charts and asked what you can identify, as well as follow-on videos in which I explain the patterns and anti-patterns.

The course is online and self-study, so you can go at your own pace, and it contains over 50 videos for you to work through. No additional downloads are needed: all the videos and example exercises (with sample data) are built into the course.