Agile not WAgile

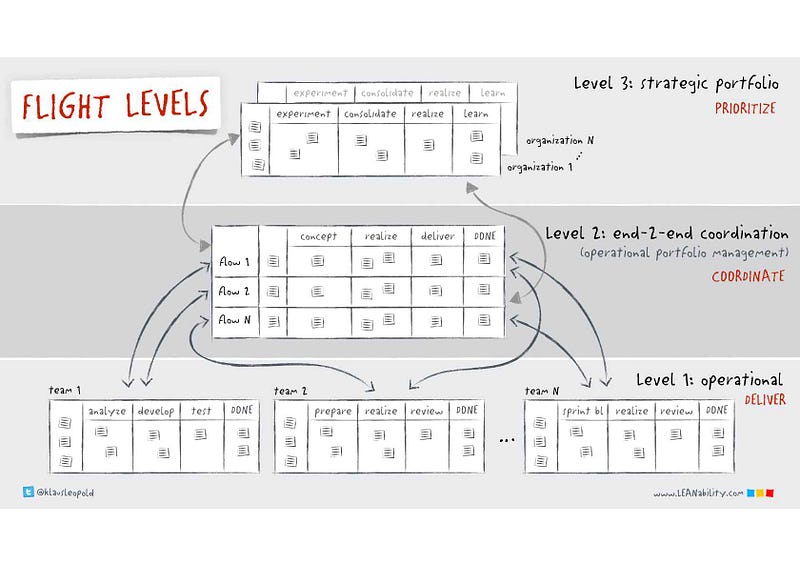

This week we’ve been reviewing a number of projects in our main delivery portfolio that are tagged as being delivered using Agile ways of working. Whilst we ultimately want to shift from project to product, we recognise that right now we’re still doing a lot of ‘project-y’ delivery, and that this will never completely go away. So, in parallel, we’re trying to at least get people familiar with what Agile delivery is all about, even when they are delivering from a project perspective.

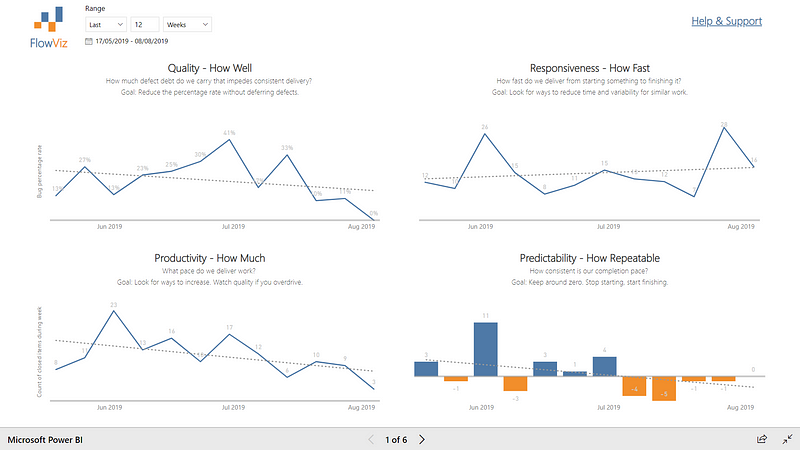

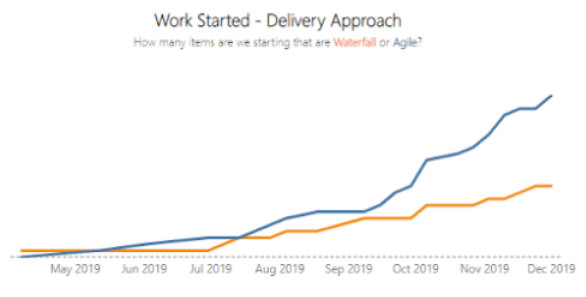

The real catalyst for this was one of our charts, which shows the work being started and how it splits between Agile (blue line) and Waterfall (orange line).

The aspiration, of course, is that with a strategic goal to be ‘agile by default’ the chart should look something like it does here, with the orange line only creeping up slightly when needed and people generally looking to adopt Agile as much as they can.

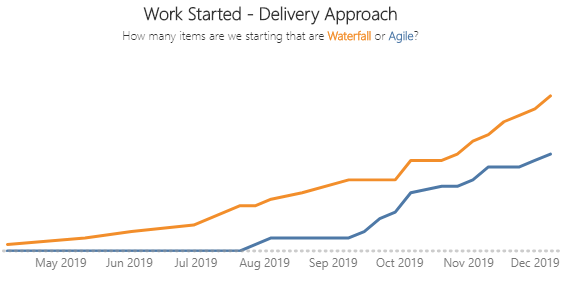

I must admit that when I saw the chart looking like the above last week, I got suspicious! I felt that we definitely were not seeing the changes in behaviours, mindset and outcomes that the chart would suggest, which prompted a more thorough review.

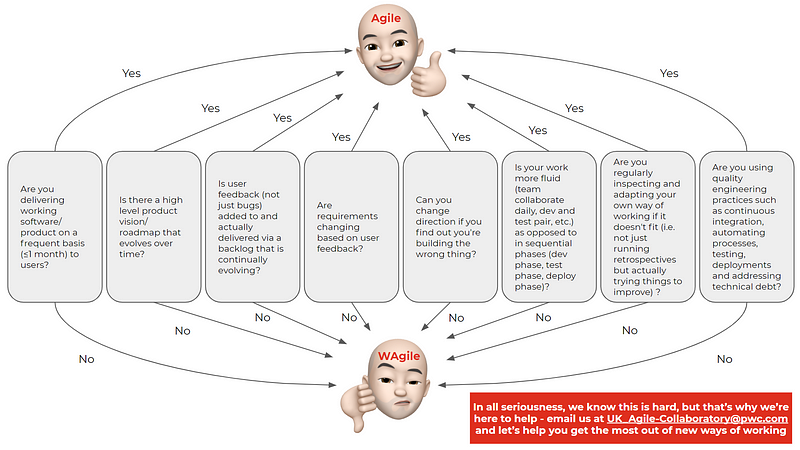

The review was not intended to act as the Agile police(!), as we very much want to help people move to new ways of working. It was to make sure people had correctly understood what Agile is really about at its core, and whether they are indeed doing that as part of their projects.

The review is still ongoing, but currently the chart looks like this (changing the waterfall/agile field retrospectively updates the chart):

The main problem observed was a lack of frequent delivery, with project teams still doing one big deployment to production at the end before going ‘live’ (but lots of deployments to test environments). Projects may be using tools such as Azure DevOps and some form of Agile events (maybe daily scrums), but work is still being delivered in phases (Dev / Test / UAT / Live). Another common theme was not getting early feedback and changing direction/priorities based on it (hardly a surprise if you are infrequently getting stuff into production!).

Inspired by the Agile BS detector from the US Department of Defense, I prepared a one-pager to help people quickly understand if their application of Agile to their projects is right, or if they need to rethink their approach:

Here’s hoping the blue line goes up, but measured against some of the criteria above, or at least that more people approach us for help with how to get there.

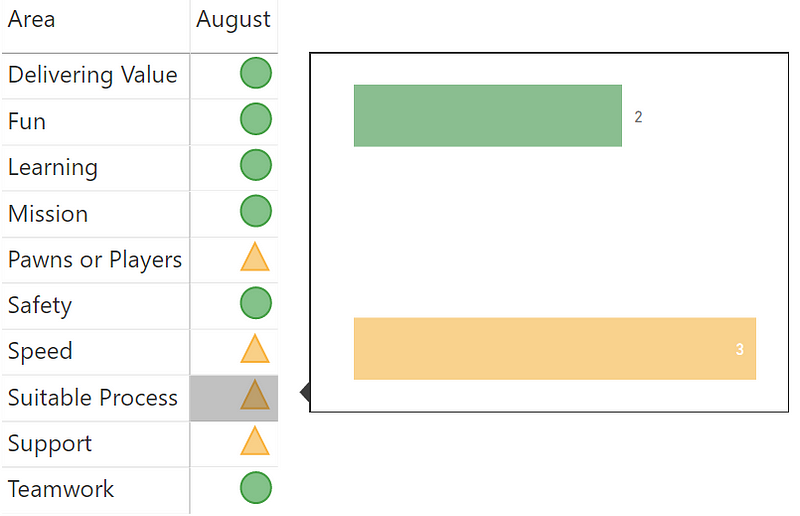

Team Health Check

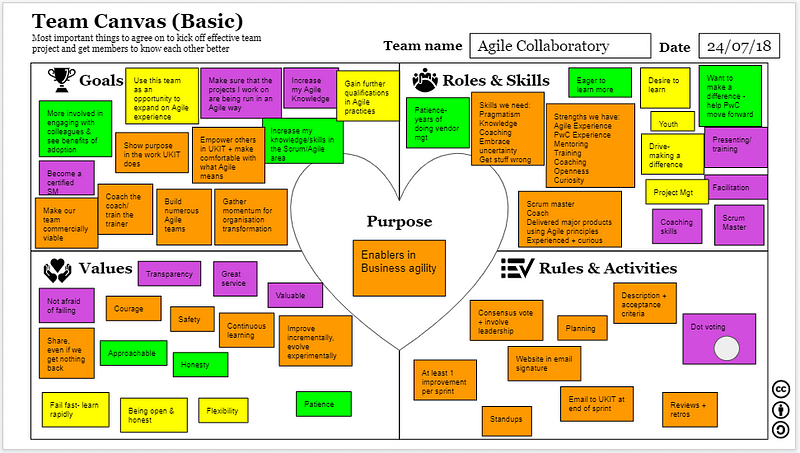

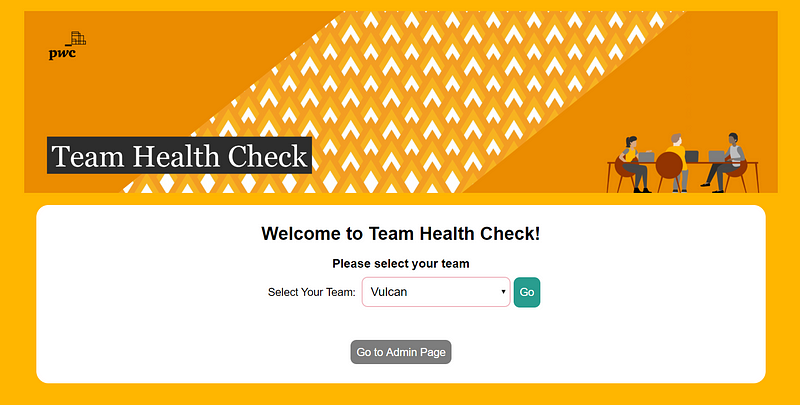

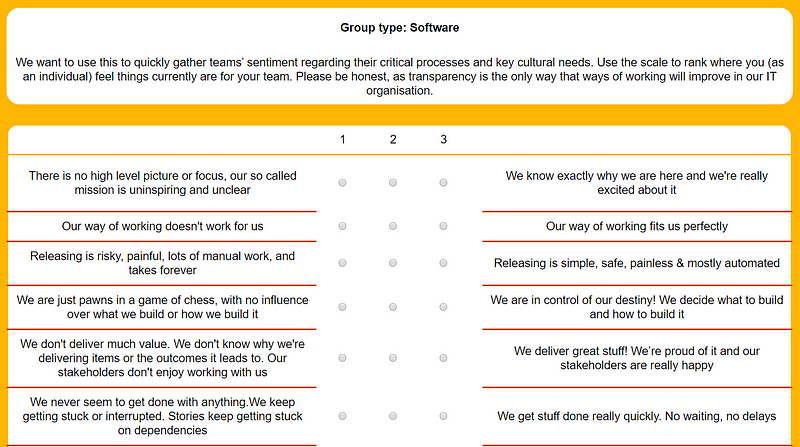

This week we had the sprint review for the project our grads are working on: a team health check web app that lets teams conduct monthly self-assessments across different areas of team needs and ways of working.

Again, I was blown away by what the team had managed to achieve this sprint. They had gone from a very basic, black and white version of the app to a fully PwC-branded version.

They’ve also successfully worked with Dave (aka DevOps Dave) to configure a full CI/CD pipeline for any future changes. As the PO for the project, I’ll now be in control of any future releases via the release gate in Azure DevOps. Very impressive stuff! Hopefully we can now share it more widely and get teams using it.

Next Week

Next week will be the last weeknotes for a few weeks, whilst we all recharge and eat lots over Christmas. We’re looking at finalising training for the new year and getting a run-through of our new Product Management course from Rachel in our team!